A histogram is a special type of column statistic that provides more detailed information about the data distribution in a table column.

Purpose of Histograms:

By default the optimizer assumes a uniform distribution of rows across the distinct values in a column. For columns that contain data skew (a nonuniform distribution of data within the column), a histogram enables the optimizer to generate accurate cardinality estimates for filter and join predicates that involve these columns.

For example, a California-based book store ships 95% of the books to California, 4% to Oregon, and 1% to Nevada. The book orders table has 300,000 rows. A table column stores the state to which orders are shipped. A user queries the number of books shipped to Oregon. Without a histogram, the optimizer assumes an even distribution of 300000/3 (the NDV is 3), estimating cardinality at 100,000 rows. With this estimate, the optimizer chooses a full table scan. With a histogram, the optimizer calculates that 4% of the books are shipped to Oregon, and chooses an index scan.

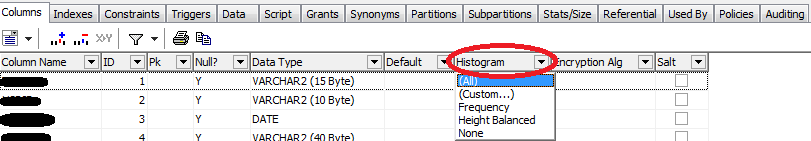

Three types of Histograms are there

- Frequency histograms and top frequency histograms

- Height-Balanced histograms (legacy)

- Hybrid histograms

Source: Oracle.com